this post is an edited, public version of a DRAFT internal discussion

Preamble

This blog post is intended to bring together topics, facts, considerations and other useful information pertaining to upcoming licensing decisions on full-stack software. It is assumed that all license decisions must be made prior to hand-off of the works to the contract originator. All license decisions must exist in writing.

As noted below, some person or entity must have signature authority to enact these license decisions. Subsequently in the project life cycle, it is recommended that a project steering committee be formed with the authority to make or reject further changes.

note:

· not all components must have the same license

· dual licensing of GPL and non-GPL is possible (more on this later)

· this is a data-driven project – data (a lot) is the origin and outputs data sets require their own licenses in any event because GPL and other OSS licenses apply to source code, not data

· the means to transform data into a base load, into a DOCUMENT, and read the outputs are code, and must be licensed

The deliverable to the contract originator is “—-” (confirm)

The Open Source Movement

Major software initiatives using an Open Source model have flourished in an unprecedented success over the last 15 years, with names like GNU, Linux, BSD Unix, Apache and Mozilla. If the ability of software developers to contribute to common source code, and to form functional communities focused on continuous improvements to their projects is the engine, then the application of standardized licensing to source code (and therefore to new contributions) is what keeps that engine on track.

Open Source by Definition

redistribution is permitted

source code is available

licensee may make derived works

see OSI

I What is in a license?

First Principles – Copyright

copyright is held by the party who commissioned the work

passing through the contractor to the contract originator

Rights of the User of Open Source Software

Distribution Rights

– who can copy the code (possession)

– who can deploy the code (run an instance of the software)

– what rights do users have with the copier/deployer (right to obtain source)

Modification Rights

– who can modify this code

– who can distribute their modifications, under what terms?

– what responsibilities do distributors of modifications have ?

Generational Limitation

Once a copy of the source code has been modified, what limitations apply to the license for distributed, changed copies? Specifically, can code be changed from an open license to a less-open license (“go closed”), or not? Must the brand / origin be displayed ? Is a party that modifies the project required to publish their changes ?

Source Code License Parts

The licensor asserts explicitly or implicitly, that they have the right to license this source code

licensor states warrenties and disclaimers

warranty of merchantability

warranty of fitness for a particular purpose

warranty against infringement

either grants protection against patent infringement or not

licensor explicitly states rights granted to the licensee

including what grants the licensee may make to further licensees

II Source Code Licenses

We do not want to define a custom license – there are enough to choose from!

Google on Licenses Blog · OSI The Open Source Initiative

Mozilla Public License – http://www.mozilla.org/MPL/

“The MPL fills a useful space in the spectrum of free and open source software licenses, sitting between the Apache license, which does not require modifications to be shared, and the GNU family of licenses, which requires modifications to be shared under a much broader set of circumstances than the MPL.” – http://www.mozilla.org/MPL/2.0/FAQ.html

(from Wikipedia)

… The MPL allows covered source code to be mixed with other

files under a different, even proprietary license. However, code files licensed under

the MPL must remain under the MPL and freely available in source form. This makes

the MPL a compromise between the MIT or BSD licenses, which permit all derived works

to be relicensed as proprietary, and the GPL, which requires the whole of a derived work, even new components, to remain under the GPL. By allowing proprietary modules in derived projects while requiring core files to remain open source, the MPL is designed to motivate both businesses and the open-source community to help develop core software.

The rights granted by the Mozilla Public License are primarily defined as passing from “contributers,” who create or modify source code, to the licensee. In the absence of patents, MPL-licensed code can be freely used, altered, and redistributed. Versions with patented code can still be used, transferred, and even sold, but cannot be altered withoutspecial permission. In addition, the MPL does not grant the licensee any rights to a contributor’s trademarks.

To fulfill the terms of the MPL, the licensee must meet certain “responsibilities,”

mostly concerning the distribution of licensed software. The licensee must ensure access to or provide all source code files covered by the MPL, even if the software is offered as an executable or combined with other code under a proprietary license. The one exception to covered files remaining under the MPL occurs when they are combined with code under the GPL, Lesser GPL (LGPL), or Affero GPL (AGPL). In this case, the creator of the combined software can choose to provide the entire work under the stricter GPL-based licenses.

The MPL has been approved as both a free software license (albeit one with a

weak copyleft) by the Free Software Foundation[3] and an open-source software license by the Open Source Initiative.[4]

[3] “Mozilla Public License (MPL) version 2.0”. Various Licenses and Comments about Them.

Free Software Foundation. Retrieved 2012-01-03.

[4] “Open Source Licenses”. Open Source Initiative. Retrieved 2012-01-07.

MIT, BSD, Apache Licenses

“… allow code to be used in proprietary software and do not require that open

source versions of the code be distributed. Code created under these licenses, or

derived from such code, may go ‘closed’ and developments can be made under that

proprietary license, which are lost to the open source community.

”

It is worth considering the Apache v2 license, or the Academic Free License here, since they are more recent that the original BSD and MIT/X, and contain some refinements

Non-lawyer Brian says :

These licenses DO NOT prevent a competitor to FIRM from taking a complete project, making source code changes that are not published, and advertising the product as their own, as long as certain notices are retained within the code.

“In short, research-style licenses, like the BSD and MIT Licenses, are ideal for

situations in which you want wide deployment of your ideas and do not care whether

this results in open source software or proprietary software.”

Apache Software License (ASL)

see ArsTechnica on Google GPL ASL HERE

“ASL, which is widely used in the open-source software community and has been

approved by the Open Source Initiative, is a permissive license that is conducive to

commercial development and proprietary redistribution. Code that is distributed

under the ASL and other permissive licenses can be integrated into closed-source

proprietary products and redistributed under a broad variety of other terms.

Unlike permissive open-source licenses, “copyleft” licenses (such as the GPL)

generally impose restrictions on redistribution of code in order to ensure that

modifications and derivatives are kept open and distributed under similar terms.

Permissive licenses like the ASL and BSD license are preferred by many companies

because such licenses make it possible to use open-source software code without

having to turn proprietary enhancements back over to the open source software

community. These licenses encourage commercial adoption of open-source software

because they make it possible for companies to profit from investing in enhancements

made to existing open-source software solutions.”

OSI 4

The license must explicitly permit distribution of software built from modified

source code. The license may require derived works to carry a different name or

version number from the original software.

GPL, GPLv2, LGPL

These licenses are the most prescriptive on the rights of those that modify and

adopt the project software.

GPL

Software can be distributed and modified without additional permission of the licensor. This imposes a mirror-image restriction on the licensee: while the licensee has free access to the licensed work, the licensee must distribute any derivative works subject to the same limitations and restrictions as the licensed work. Derivative works must be licensed under the GPL and be subject to all of its conditions.

see GNU Software -LINK-

GPLv3

Introduced in 2007 – GPL Definitions -LINK- An essay discusses the differences in GPLv3. -LINK- The primary goal appears to prevent certain kinds of modification (“Tivoization”)

Brian says: I do not believe that any open source license can restrict the use cases of the software, including commercial use. So there is no such thing as “GPLv3 non-commercial” see-LINK- ; “No OSI-certified Open Source license (or FSF-approved Free Software license) will restrict commercial use…” David Thornley, 30dec10

GPLv2+ is modified v2 to be compatible with v3

LGPL

A key difference in the LGPL is that is does not restrict the ability of a library to “link” to othe libraries.. (referring to compiled-code architecture) The GPL wording is such that a GPL licensed code project cannot be linked to non-GPL code, but an LGPL library can.. Stallman discusses an implication of this here

see GNU Why not LGPL -LINK-

Python Software Foundation License

The license for python, itself. -LINK-

from wikipedia:

The Python Software Foundation License (PSFL) is a BSD-style, permissive free software license which is compatible with the GNU General Public License (GPL). Its primary use is for distribution of the Python project software. Unlike the GPL the Python license is not a copyleft license, and allows modifications to the source code, as well as the construction of derivative works, without making the code open-source. The PSFL is listed as approved on both FSF’s approved licenses list, and OSI’s approved licenses list.

Source code written in python can not be hidden while it is running. -LINK-

III Data Licenses

This topic remains contentious and is still evolving rapidly. Drivers of change include new technology, new social norms and new markets.

Creative Commons

A quick web tool to assist in choosing a license -LINK-

Example — given that modifications are permissible as long as they are published, and

if commerical use of the datasets are permissable

Creative Commons Attribution-ShareAlike 3.0 United States License -LINK-

or, if commercial use of the datasets is not permissable

Creative Commons Attribution-NonCommercial-ShareAlike 3.0 United States License -LINK-

An introduction to an open data in general HERE

Open Data Commons.org

The Open Data Commons appears to be expanding -LINK-. Their

license appears to be an approximation of the GPL for data. HERE

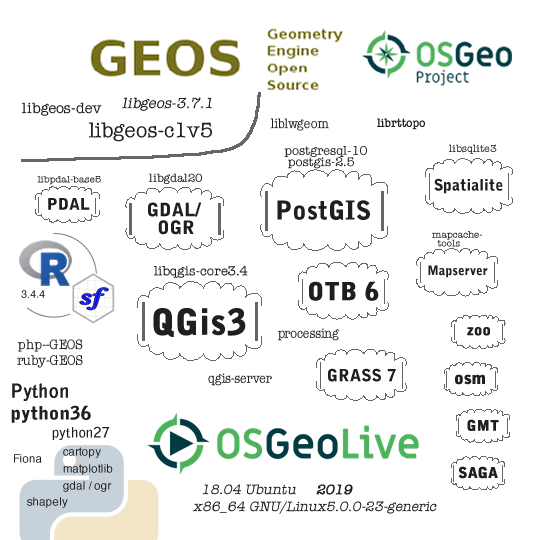

IV Components Inventory

GUI

The sketching GUI will immediately be identified by some as “the product.”

Internal architecture and components are not visible to those without knowledge of software tech.

However, the GUI as written is only one possible interface to the underlying engines, by design.

GUI includes some data, for example, visual style definitions in Geoserver, but does not include base-load and DOCUMENT definitions.

Some GUI-specific data can be repurposed in other implementations e.g. geoserver style definitions.

Framework

The technology mix used to initiate, process and retrieve. The backbone of the project, to which all parts are connected.

DOCUMENTS are data in a certain form. Currently the only practical way to create DOCUMENTS is through the GUI, but a DOCUMENT in the defined form is NOT part of the GUI, but part of the framework.

Engines

Engines are discrete components, and may carry a separate license. An example is a specialized CALC engine that is re-written for performance, or to express a different model. Engines may perform only on base data, only on DOCUMENT data, or both.

Base Load Data

The ability to load base data – “base load scripts” must have a license

The data products must have a license

Documentation

Documentation is a “value add” and can be considered its own component. Docs can be in the form of “help” with context, often embedded, or as a book, to be read, studied, referred to, etc..

Components

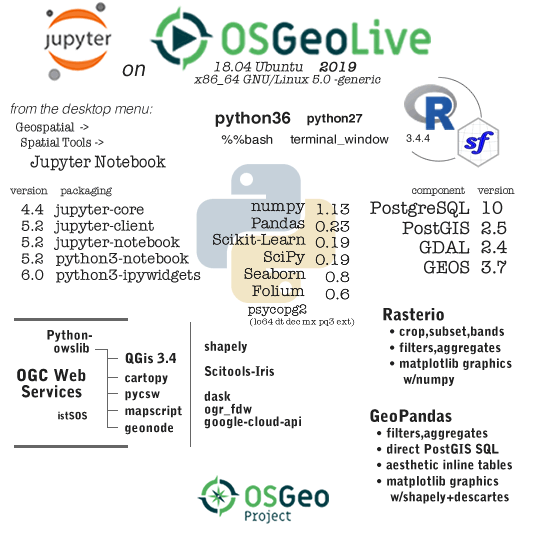

Each of the above are written in programming languages which are different. The most stark example is the Web GUI, written in Javascript and the Django python framework.

The Framework is the collection of python and Postgresql/PostGIS SQL.

Engines are particular, discrete expressions of models,

currently in python and SQL but could be written in even higher performance code.

Base Load scripts.. the ability to take public and private data sets, well defined, and transform them into the particular database tables operated upon by the Framework.

IV Strategy Discussion for Choice of License

Brian’s Thoughts on “Why”

there are several axiis upon which to measure characteristics and likely trajectories of OSS. The following names some of those and asks, what are the goals of the project along these axiis ?

What are the goals of FIRM? It may be said that “if you want to hack, try it out yourself, if you want to initiate a paid study, contact FIRM”

Community versus Poker

Poker is a game where all participants are known to each other as fighting for their own best interests at all times. Paradoxically it does form communities around the activities, but the goals are clearly self-serving. There is mostly “poker” in business relationships. Community on the other hand, implies considerations of group benefits at all times, perhaps expectations of individuals to take losses for the benefit of the whole.

Local government is allegedly a community office. Particularly high quality or enabling software is known to draw community. Science is sometimes termed community, although paradoxically, science is often highly competitive and therfore encourages self-serving decisions.

This project spreads both technical capacity, and brand. Control of the brand, with implied quality, is a crucial consideration in the permissiveness of the modification license. The license may state that derived works MUST NOT carry the original brand, or MUST, there is an argument for either.

Speed of Adoption, Size of Adoption

How many organizations might use this tool set, now or in a few years from now?

At what rates are first contact, first trial and first real usage desirable

or even possible ?

There are well-understood characteristics of the patterns of new product adoption

see Wikipedia on Diffusion of Innovation HERE

Encourage and enable adoption versus hold back powerful competitors

In a networked environment, and a high quality product of specialized interest,

the dynamics change quite a bit. Technical consulting can be very difficult

endeavor, and for the successful, holds importance and money. While keeping a goal of

new comers enabled by tech to do great analysis, the fact is that a small number of

powerful technical consulting groups may find this package useful to compete with the

others, to provide services to those unable or uninterested in doing it themselves.

This package is so enabling, that it could be said to further the state of the art

in the field.

Data Driven Analysis

this environment is data driven, that is, extensive and diverse data is required to

start, and the output is largely data. Quality and quantity of data is immediately an

issue. Is the goal to spread the technology widely, while playing poker with the data?

Is it to distribute the data widely, and play poker with the client relationships ?

There is an unending desire by knowledgeable parties for data, both inputs and outputs. The work to clean, organize and report the data is enabled by the software, but it is not free – resources must come from somewhere.. The Federal, State and County governments are huge sources of raw data, but it is not enough.

What is the desired net effect of the release of this project with respect to the data, and the ability to produce it, integrate new sources, produce new reports? This topic is almost as large as the Framework and GUI itself – consider that the Framework and GUI are not easily written, but far fewer parties would have interest in modifications; while the data is of interest to virtually all who would be interested in this project.

End Notes

The decision to mark software work products with an Open Source license, and how to handle data associated with the project and its use, is complicated and deserves attention. Here we have reviewed a collection of the most popular Open Source licenses. Data must be licensed separately from code, and some attention is shown to emerging data licenses. The motivation to write this is that a full-stack software project is considered for licensing decisions. Customers, community motivations and strategy are briefly discussed. With this short post, several large and different approaches can be seen, compared and contrasted.

references

https://code.gov/about/overview/introduction

EU eGovernment Services OSS-procurement-guideline HERE

UM FOSS GIS University of Massachusetts, Amherst 2007